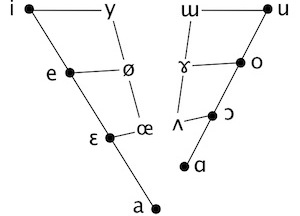

The vowel space

Vowels can be tricky to describe phonetically because they are points, or rather areas, within a continuous space. Any language will have a certain finite number of contrasting vowels, each of which may be represented with a discrete alphabetic symbol; but phonetically each will correspond to a range of typical values, and between any two actual vowel sounds there is a gradient continuum.

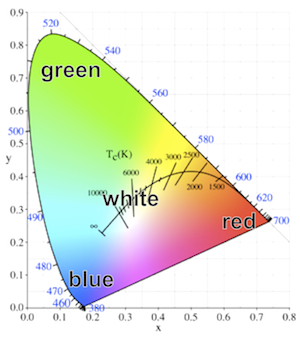

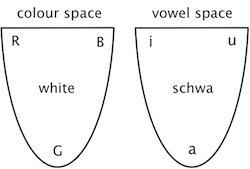

A good analogy can be made with colour. Here is the widely used chromaticity diagram of the Commission Internationale de l’Éclairage (CIE):

The rounded V-shape of the diagram defines the perimeter of perceptible colour, running between three ‘corner’ colours, red, green and blue. There is a continuous space between these three colours, and also between the corner trio and the central area corresponding to white.

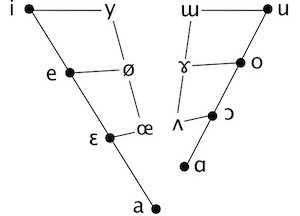

Similarly, the vowel space can be diagrammed as a rounded V-shape with three ‘corner’ vowels, namely i, a, u. There is a continuous space between these three vowels, and also between the corner trio and the ‘colourless’ central area corresponding to schwa. Schematically:

Languages make different categorizations of both the colour space and the vowel space. For instance, many languages (eg Vietnamese) have a single word to cover the part of the colour space which English divides into green and blue. On the other hand, English has only one basic term blue for a space which Russian and Italian divide into two: Russian sinij and Italian blu correspond to what English has to call ‘dark blue’ while goluboj and azzurro correspond to ‘light blue’. Similarly with vowel categorizations: the part of the vowel space where Japanese and Spanish have only e is divided by Italian and Yoruba into two, namely e and ɛ.

The triangularity of the chromaticity diagram reflects the three kinds of photoreceptors (cones) in the retina, which respond differently to different parts of the visible spectrum. The triangularity of the vowel space reflects our sensitivity to different patterns in the acoustic spectrum: u is characterized by resonances (also called formants) in the lower part of the vowel range, a by resonances in the mid range, and i by a combination of high and low resonances.
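Why schwa sits in the ‘colourless’ centre can be illustrated with a standard textbook idealization that the post itself does not rely on. A uniform tube closed at one end (the glottis) and open at the other (the lips) resonates at odd quarter-wavelength frequencies, F_n = (2n − 1)c / 4L. Assuming a speed of sound of about 350 m/s and a vocal tract length of 17.5 cm (typical adult-male figures, not values from the post), this gives roughly 500, 1500 and 2500 Hz, the classic ‘neutral’ schwa. Constricting the tube at different points shifts these resonances up or down, which is roughly what pushes vowels out towards the corners. A minimal sketch:

```python
def uniform_tube_formants(length_m: float = 0.175, speed_of_sound: float = 350.0,
                          count: int = 3) -> list[float]:
    """Resonances of a uniform tube closed at one end (glottis), open at the other (lips).

    Quarter-wave resonator: F_n = (2n - 1) * c / (4 * L), with c in m/s and L in metres.
    The default length and speed of sound are assumed typical values.
    """
    if length_m <= 0 or speed_of_sound <= 0:
        raise ValueError("length and speed of sound must be positive")
    return [(2 * n - 1) * speed_of_sound / (4 * length_m) for n in range(1, count + 1)]


# A 17.5 cm tract gives the 'neutral' schwa-like resonances.
print([round(f) for f in uniform_tube_formants()])  # [500, 1500, 2500]
```

A shorter tube raises every resonance by the same proportion, which is one reason children’s and many women’s formants are higher than men’s.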

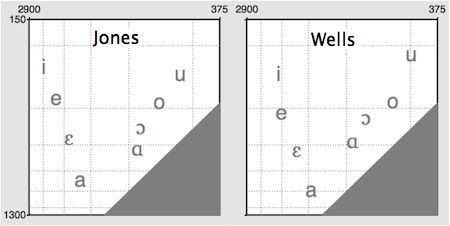

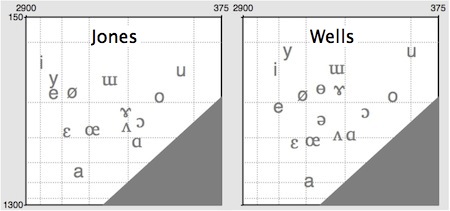

The two-dimensional colour space diagram is derived in a rather complex manner, but a two-dimensional vowel space diagram can be created fairly simply by plotting the lowest resonance (also called the ‘first formant’ or ‘F1’) against the second-lowest resonance (also called the ‘second formant’ or ‘F2’). Here are the primary cardinal vowels as recorded by Daniel Jones and John Wells:

(F1 is plotted from top to bottom, F2 from right to left; the scales are logarithmic, and the numbers are frequency values in hertz. The bottom right corner is greyed out because F1, being by definition the lowest resonance, cannot be higher than F2.)

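The axis conventions just described can be captured in a few lines. This is a minimal sketch, not how Praat draws its plots: it maps a vowel’s (F1, F2) in Hz to (x, y) in a unit square, with both axes logarithmic, F2 increasing from right to left and F1 increasing from top to bottom. The display ranges (200 to 1000 Hz for F1, 500 to 3000 Hz for F2) and the function name `chart_position` are illustrative assumptions.

```python
import math


def chart_position(f1: float, f2: float,
                   f1_range: tuple[float, float] = (200.0, 1000.0),
                   f2_range: tuple[float, float] = (500.0, 3000.0)) -> tuple[float, float]:
    """Map (F1, F2) in Hz to (x, y) in a unit square laid out like a vowel chart.

    Both axes are logarithmic. High F2 (front vowels) is at x = 0, on the left;
    low F1 (close vowels) is at y = 0, at the top. The default ranges are assumptions.
    """
    if f1 >= f2:
        # The greyed-out corner: F1 is by definition the lowest resonance.
        raise ValueError("F1 must be lower than F2")
    f1_lo, f1_hi = f1_range
    f2_lo, f2_hi = f2_range
    x = (math.log(f2_hi) - math.log(f2)) / (math.log(f2_hi) - math.log(f2_lo))
    y = (math.log(f1) - math.log(f1_lo)) / (math.log(f1_hi) - math.log(f1_lo))
    return x, y
```

The resulting coordinates can be handed to any plotting library; only the ratios between frequencies, not their absolute values, determine the spacing on log axes.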

The broadly triangular shape of the vowel space is evident for both speakers, though their absolute values differ. These discrepancies are due partly to natural differences in the size and shape of the speakers’ vocal tracts, and partly to variations in the precise realizations of the sounds: John’s [u], for instance, is rather extreme.

Today it’s remarkably straightforward to produce such plots from sound recordings. Thanks to technological developments and the public-spirited work of brilliant scientist-programmers like Paul Boersma and Mark Huckvale, many wonderful apps are freely available. Boersma’s industry-standard Praat can be downloaded for free in versions for both Mac and PC.

Early phoneticians were not so lucky. Unable to measure actual speech sounds, they were thrown back on whatever visual and tactile (or ‘proprioceptive’) impressions they could gain of what the body was doing to produce the sounds. One of the great pioneers was Alexander Melville Bell, father of the telephone’s inventor Alexander Graham Bell (father and son gave phonetic lecture-demonstrations at UCL in the mid-nineteenth century). Bell had the idea of describing vowels in terms of associated tongue configurations.

Bell classified vowels in terms of two dimensions, based on his estimation of how high or low the tongue was positioned, and how front or back in the mouth. Before long, phoneticians had widely adopted this view of the vowel space as a tongue space, and even today Bell’s terms high/low and front/back are in general use as vowel classifiers. (This is also why we conventionally plot low F1 values at the top of the vowel space: this resonance tends to be inversely related to estimated tongue height.)

However, as Peter Ladefoged puts it in his Vowels and Consonants:

These early phoneticians were much like astronomers before Galileo… [who] thought that the sun went round the earth every 24 hours, and that most stars did the same… The observations of the early astronomers were wonderful. They could predict the apparent movements of the planets fairly well. These astronomers were certain they were describing how the stars and planets went around the earth. But they were not. The same is true of the early phoneticians. They thought they were describing the highest point of the tongue, but they were not. They were actually describing formant frequencies.

As early as 1928, American speech scientist George Oscar Russell published an x-ray study which undermined the notion of the vowel space as a tongue space. In Russell’s words, “phoneticians are thinking in terms of acoustic fact, and using physiological fantasy to express the idea”.

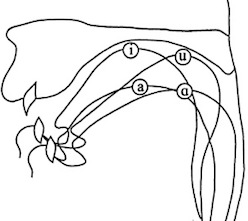

So it may come as a slight shock to be reminded that the International Phonetic Association still promulgates the ‘pre-Galilean’ view of the vowel space as a tongue space. The Handbook of the IPA (Cambridge University Press, 1999) begins its section on vowels with this cross-section of the vocal tract

and explains that


joining the circles representing the highest point of the tongue in these four extreme vowels gives the boundary of the space within which vowels can be produced. For the purposes of vowel description this space can be stylized as the quadrilateral [on the IPA chart]

The Handbook concedes that, in order to gauge intermediate positions between these tongue extremities, it’s necessary to listen to the actual sounds. This follows Daniel Jones, whose Outline of English Phonetics (9th ed., 1960) refers vaguely to the “resonance chambers” which determine vowel quality, and states for the primary cardinal vowels that

the degrees of acoustic separation between each vowel and the next are equal, or, rather, as nearly equal as it is possible for a person with a well-trained ear to make them.

Interestingly, Ladefoged tells us in his informal CV that

Jones never defined what he meant by saying that the cardinal vowels were acoustically equidistant. He thought that the tongue made equal movements between each of them, even after the publication of x-ray views of the 8 primary cardinal vowels produced by his colleague Stephen Jones [no relative] showed that this was not the case (Jones, 1929). Daniel Jones himself published photographs of only four of his own cardinal vowels, although, as he told me in 1955, he had photographs of all 8 vowels. When I asked him why he had not published the other four photographs, he smiled and said ‘People would have found them too confusing’.

The IPA Handbook prefers to talk of “abstraction”:

The use of auditory spacing in the definition of these vowels means vowel description is not based purely on articulation, and is one reason why the vowel quadrilateral must be regarded as an abstraction and not a direct mapping of tongue position.

Nonetheless, the obsolete articulatory view of the vowel space is still presented to students with depressing regularity.

So Daniel Jones’s primary cardinal vowels [i e ɛ a ɑ ɔ o u] simply mapped the outline of the auditory-acoustic vowel space, an outline whose articulation requires complex adjustments of the jaw, tongue and lips.

The articulatory view led to the invention of the so-called ‘secondary cardinal vowels’ – the primary cardinals articulated with reversed lip positions. The plotting of these on the periphery of the quadrilateral means that the lips are factored out of the diagram. This reduces it to a tongue space, and eliminates the auditory-acoustic underpinnings which had motivated it (overtly or not) in the first place.

It is this tongue space (albeit stylized or ‘abstract’) which is perpetuated on the IPA chart: any point on it indicates an articulation which can be either rounded or unrounded. From the acoustic point of view, this doesn’t make sense: if you change a vowel’s lip posture then you change the sound, and therefore its position in acoustic space. In particular, if you reverse the lip positions of the primary cardinal vowels, the resultant sounds are all centralized – except for [ɒ], which is an acoustically peripheral vowel somewhere between [ɑ] and [ɔ].

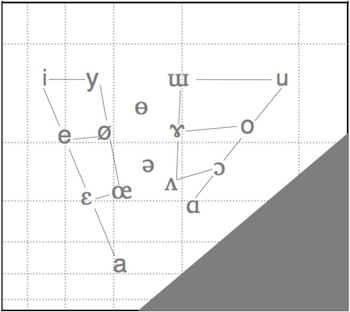

Here again are the Jones and Wells acoustic vowel spaces, plotted as before by means of Praat’s Vowel Editor, but now augmented with some additional vowels to show how they fill out the space within the periphery defined by the primary cardinals:

According to the first sentence of the IPA Handbook, the aim of the Association is “to promote the study of the science of phonetics and the various practical applications of that science.” In that spirit, I thought it might be worth sketching out a vowel chart based on acoustic science rather than “physiological fantasy”. By averaging Jones’s and Wells’s formant values, and then adjusting these to interpret fairly literally Jones’s notion of acoustic equidistance, I came up with a tentative set of values which can be plotted as follows (with some connecting lines as a visual aid):

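The post does not spell out exactly how the averaging and the equidistance adjustment were done. As one possible reading, here is a sketch under stated assumptions: each vowel is an (F1, F2) pair, the two speakers’ values are averaged geometrically (the midpoint on log axes, matching the logarithmic plots), and the spacing between successive cardinals is measured as Euclidean distance in log-frequency space. The function names and the distance measure are hypothetical choices; a perceptual scale such as Bark or ERB would be an equally defensible alternative.

```python
import math

Formants = tuple[float, float]  # (F1, F2) in Hz


def log_average(a: Formants, b: Formants) -> Formants:
    """Geometric mean of two speakers' formants: the midpoint on log-scaled axes."""
    return (math.sqrt(a[0] * b[0]), math.sqrt(a[1] * b[1]))


def log_distance(a: Formants, b: Formants) -> float:
    """Euclidean distance between two vowels in log-frequency (F1, F2) space."""
    return math.hypot(math.log(a[0] / b[0]), math.log(a[1] / b[1]))


def step_sizes(series: list[Formants]) -> list[float]:
    """Distances between each vowel and the next along a series, e.g. the front cardinals."""
    return [log_distance(p, q) for p, q in zip(series, series[1:])]


def spacing_spread(series: list[Formants]) -> float:
    """Ratio of the largest step to the smallest; 1.0 means perfectly equidistant."""
    steps = step_sizes(series)
    return max(steps) / min(steps)
```

Given averaged values for a series such as [i e ɛ a], a spread well above 1.0 would flag which steps need adjusting to make the spacing literally equal.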

The Praat Vowel Editor can generate basic synthetic vowels at any point on the chart. If you click on the symbols in the chart above, you will hear a synthesized vowel with the corresponding F1 and F2 values. (For each vowel, I have also used the Editor to make appropriate adjustments to the higher resonances F3 and F4. While F1 and F2 are adequate for differentiating the vowels on the chart, higher formants make syntheses more natural, F3 making a particular contribution to the auditory “rounding” of the closer front vowels.)
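Praat’s Vowel Editor handles the synthesis itself. To show roughly what a formant synthesizer of this general kind does, here is a minimal cascade sketch in the source-filter tradition. It is not Praat’s algorithm: an impulse-train ‘glottal’ source is passed through one second-order digital resonator per formant, using the resonator coefficients of Klatt’s (1980) formant synthesizer. The fundamental frequency, the single 80 Hz bandwidth for every formant, the sample rate and all function names are assumptions.

```python
import math
import struct
import wave


def resonator(signal: list[float], freq: float, bandwidth: float, rate: int) -> list[float]:
    """Second-order digital resonator with Klatt-style coefficients (unity gain at 0 Hz)."""
    t = 1.0 / rate
    c = -math.exp(-2.0 * math.pi * bandwidth * t)
    b = 2.0 * math.exp(-math.pi * bandwidth * t) * math.cos(2.0 * math.pi * freq * t)
    a = 1.0 - b - c
    out: list[float] = []
    y1 = y2 = 0.0  # the two previous output samples
    for x in signal:
        y = a * x + b * y1 + c * y2
        out.append(y)
        y1, y2 = y, y1
    return out


def synthesize_vowel(formants: list[float], duration: float = 0.5, f0: float = 120.0,
                     rate: int = 16000, bandwidth: float = 80.0) -> list[float]:
    """Impulse train at f0 passed through one resonator per formant, peak-normalized to +/-1."""
    n = int(duration * rate)
    period = int(rate / f0)
    signal = [1.0 if i % period == 0 else 0.0 for i in range(n)]
    for freq in formants:  # cascade: each resonator filters the previous one's output
        signal = resonator(signal, freq, bandwidth, rate)
    peak = max(abs(s) for s in signal) or 1.0
    return [s / peak for s in signal]


def write_wav(path: str, samples: list[float], rate: int = 16000) -> None:
    """Save samples in [-1, 1] as a mono 16-bit WAV file."""
    with wave.open(path, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)
        w.setframerate(rate)
        w.writeframes(b"".join(struct.pack("<h", int(s * 32767)) for s in samples))
```

For example, `write_wav("schwa.wav", synthesize_vowel([500, 1500, 2500]))` should produce a buzzy, schwa-like vowel. A bare impulse train with a flat pitch sounds mechanical; shaping the source spectrum and giving each formant its own bandwidth would make the result more natural, much as adjusting F3 and F4 does in the Vowel Editor.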

In principle, much higher-quality, genuinely natural-sounding syntheses could be produced for these (or other) qualities, providing standardized objective reference vowels, much as the colours of traffic and aviation signals are given standardized chromaticity specifications. Speech synthesis allows reliable equidistant spacing, and such reference vowels could replace the inevitably variable vowel demonstrations which have been recorded by different phoneticians from Jones onwards. Note that it is the shape of the space – roughly, the ratios of the formant values – which matters more than precise absolute frequencies; the space could be normalized and adjusted to synthesize female or child speech.
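The point that the shape of the space depends on formant ratios rather than absolute frequencies can be made concrete. This is a sketch under a simplifying assumption: a single factor scales every formant, as it would for a uniform tube whose length changes. Real differences between men’s, women’s and children’s vocal tracts are not proportional, so a single factor is only a first approximation. The function names and example lengths are hypothetical.

```python
def scale_formants(formants: list[float], factor: float) -> list[float]:
    """Multiply every formant by the same factor; the ratios (the 'shape') are unchanged."""
    if factor <= 0:
        raise ValueError("factor must be positive")
    return [f * factor for f in formants]


def formant_ratios(formants: list[float]) -> list[float]:
    """Ratio of each formant to the one below it, e.g. F2/F1, F3/F2."""
    return [hi / lo for lo, hi in zip(formants, formants[1:])]


def length_scale_factor(reference_length_cm: float, target_length_cm: float) -> float:
    """For a tube-like vocal tract, formants scale inversely with tract length."""
    return reference_length_cm / target_length_cm
```

For instance, moving reference values from a 17.5 cm tract to a hypothetical 14 cm tract gives a factor of 1.25. On logarithmic axes this multiplication is a rigid shift, so the chart’s shape, and every vowel’s position relative to the others, stays the same.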

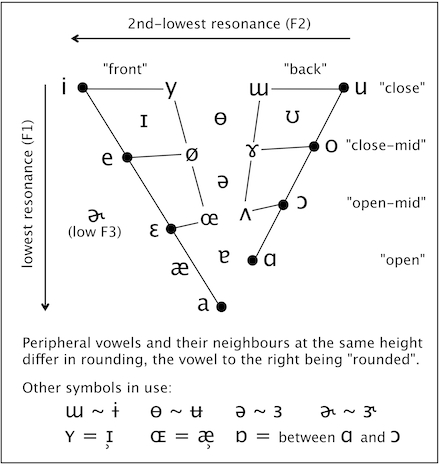

Here I have tried to stylize the acoustic space into a chart on the model of the IPA’s quadrilateral; again, clicking on the symbols gives syntheses generated by the Praat Vowel Editor.

The chart is pretty conservative. It maintains (indeed, to some extent it explains) the three parameters of “height” (or closeness), “backness” and “rounding” which all phoneticians and phonologists make use of. The black dots preserve Jones’s eight primary cardinal vowels, equally spaced on the “front” and “back” sides of the periphery. The colourless vowel schwa is still located mid-centre.

The triangularity of the vowel space is made clear. Most languages have triangular systems; Spanish, for example, has a classic five-vowel system with three corner vowels which are somewhat centralized versions of the reference/cardinal vowels i, a and u on the chart. A clearly four-cornered system like that of Finnish (with i–æ–ɑ–u) is still revealed as such on the chart.

There is a deliberate ambiguity in the situation of a on the chart. By means of connecting lines, I have preserved Jones’s allocation of a to the “front” series of cardinal vowels. But a is located centrally, below schwa. And many languages treat their single open vowel as belonging to the phonologically “back” category. The front/back ambiguity of a could be enshrined explicitly by the addition of a connecting line to the “back” series.

I’ve made slight changes to the interpretation of a few symbols. These have the general effect of reducing the number of distinct qualities, so that several symbols which have distinct definitions on the IPA chart are treated here as notational variants. These changes are mostly optional (ie the chart could be re-populated if desired), but I do think that the official IPA chart is crowded with symbols which exist not so much for good acoustic or linguistic reasons as to fill the slots implied by its tongue-space framework.

For instance, I’m not sure that languages ever contrast ɨ and ɯ; the unrounded close non-front vowel of languages like Turkish and Vietnamese is sometimes transcribed as ɯ and sometimes as ɨ. However, languages do seem to make use of a somewhat close, somewhat rounded vowel which is neither y nor u – for instance, the “compressed u” of Swedish and Japanese, the Scottish FOOT-GOOSE vowel and the “fronted” FOOT of Southern BrE. For this quality it seems reasonable to use ɵ/ʉ, and my synthesized vowel is based on the formants of John Wells’s demo of IPA ɵ.

Lastly, I’ve added the rhotic vowel ɚ, which is acoustically distinct from ə mainly in terms of its lowered third resonance (F3). It would be easy to turn the above chart into a three-dimensional vowel space with F1, F2 and F3 as its axes: each vowel could be perched on a “stick” the height of which would correspond to F3. ɚ could be included in such a space, its stick standing close to that of ə but considerably shorter than any of the other vowels’ sticks. However, the added visual complication hardly seems worth the trouble for a practical chart, so I’ve kept the space two-dimensional, and kept ɚ off it, to one side.

Great article!

Your “synthesized” sounds are OK for the open/low vowels, but sound awfully strange for the high/close vowels — like someone with a cold being strangled 🙂

Thanks! I think the upper formants of i were a bit too fierce and I’ve toned them down. The other close vowels sound no worse to me than the opener vowels. But cardinals are deliberately extreme and even human demonstrations can sound a bit ‘strangled’…

Thanks!

Would you mind posting, perhaps in a comment, the source formants you used to generate these sounds (as they appear in the final version)? I presume you have them in a file somewhere. That would be incredibly useful to me!

Thanks.

I’ve emailed them.

I enjoyed reading this. I have one complaint though. I don’t know who’s to blame here (maybe me), but every time I click on one of the vowels in this post it takes me all the way back to the top of the page. This is a bit annoying with a long blog post like this one.

Thanks. Are you using Safari? If so, it might help if you can clear history then reload or restart.

I always thought that ɚ didn’t exist and that it was some weird symbol invented for weird reasons when a syllabic alveolar approximant would do.

@Aleph: the relationship between [ɚ] and [ɹ] is comparable to that between, say, [i] and [j]. The use of one symbol rather than the other may not correspond to any physical or physiological difference in the phone, but rather to whether it is being treated as syllabic or non-syllabic.

Thank you, dw, for letting me know! I was seriously thinking I was deaf and couldn’t hear the difference. Not that it hadn’t occurred to me to think about the relationship between [i] and [j] (or [u] and [w]).

Although, I should ask, which symbol best describes the American consonant?

/ɹ/

Good. I thought it was [ɻ].

I’d say it varies between [ɻ] and [ɹ]. It is indeed quite often retroflex for many North American speakers.

Thank you, Mitko! I’m glad I wasn’t imagining things. Though I do remember vaguely a post somewhere which said that neither of those symbols is 100% appropriate.

I have to strongly disagree that [ɹ] and [ɚ] are the same. I am American, and my phonetic inventory includes both of these sounds (they are allophones), at least as far as I understand. The first is an alveolar approximant, where the tip of my tongue touches the alveolar ridge. The second is an r-colored vowel, which I make in the back of my mouth. They do sound the same to me, but I only use [ɚ] word-finally, and I only use syllabic [ɹ] in words like cataract. So for me, batter act does not quite rhyme with cataract. (There are also words like serene where I can use either one, depending on how fast I’m talking.) Since they are allophones, however, for phonemic notation you should definitely pick one and stick with it.

One more thing: syllabic [ɹ] and [ɚ] definitely sound the same to me, but I don’t know whether they sound the same to everybody. One would have to see whether there are languages where these are two distinct phonemes.

I am confused. What you are describing is the same sound, as you yourself say, but they are different? Syllabicity really has nothing to do with it: I believe one could easily write [ˈlɛt̬ɹ̩], but phoneticians and phonologists don’t because it is phonologically wrong.

Actually, some Americans definitely pronounce letter with [ɹ̩], but others use [ɚ], while I believe all Americans use some variant of [ɹ] in red. These two sounds are made in quite different ways, and I don’t see why you shouldn’t use two different notations for them. I expect phonologists can distinguish them by ear. I can’t, because they are allophones and my brain has been trained to treat them the same (the same way that some Americans can’t distinguish between [ɔ] and [ɑ], something which astounds me).

Geoff, I enjoyed this article very much. I’m always so frustrated that I can’t click on the diagrams on my iPad to hear the sounds—I assume that you use Flash to make the charts with sounds, which makes sense: it’s the easiest interface for building this kind of thing. I end up having to wait til I get back to my mac to be able to hear your point.

In your final chart, I find it surprising how “fronted” the /a/ sound feels to me. I crave a /ɐ/ spot on the chart that is in the triangle delineated by the three corners a—ɛ—ɔ that resembles the STRUT vowel of many accents. The value of /ʌ/ feels both too central (schwa-ish, if you will) and too back to be useful.

It feels like many accents of English have a GOOSE vowel sitting somewhere significantly fronter than the traditional /u/ value, and there’s no /ʉ/ value on the chart (though I suspect that this would be too fronted to be of use, too.) So we end up using broad transcriptions that are a fairly long way off from whatever “normalized,” “cardinalized,” “idealized” vowel values/symbols we decide to use.

I think you’re right in your 1962 post—these choices of Gim’s have been ossified into artifacts that are affecting the discourse in ways that are unfortunate. I feel like I’m operating so many filters when I read texts on phonetics these days, and clearly I’m operating under many of them as I try to write and discuss and teach IPA to my actor-students.

Bless you for shining your light on these topics: you bring a nice dose of science, humour and openness to the process. Thank you.

Hi Eric and thanks for the nice words. I agree with you about ɐ and have now added it to the chart. Accordingly I’ve also removed the “equivalence” of ʌ and ɐ from the original post. So we could say that many Spanish speakers, for example, have corner vowels close to the syntheses of ɪ, ɐ, ʊ.

You say that there’s no ʉ on the chart. Depends what you mean. I’m treating ɵ and ʉ as notational variants (at least until I’m persuaded otherwise), so you could put either one of them in that close-ish central position on the chart.

So does that mean that, for standard European Spanish, one could easily choose ‹ɪ, ɐ, ʊ› as the standard symbols for the /i, a, u/ phonemes and not be wrong? In fact, might that even be more accurate, closer to the most frequent allophones?

I would also like to ask you about something I now see you once mentioned: the unrounded allophone of /ɒ/ in Gimsonian pronunciation. Is that really phonetically an [ɑ]? Or is it an [ʌ], but not an [ɐ]? If you check that video about beef and beer, would it be at all possible, given your technical prowess, to measure the vowel the narrator pronounces in the word hops and several others? I would really like to know how front or back and how open or close that vowel is!

Out of curiosity, what video are you referring to? Is it a video from a different posting on this blog?

You should check the entries Morgen – a suitable case for treatment and Clearly varisyllabic. There is this weird old realization of LOT that no one but Geoff Lindsey has written about.

Yet I think there is a weird mislabelling of this vowel, it is not [ɑ], but rather something like [a], much fronter than the fully back PALM and I believe even closer to cardinal [a]. In the beef and beer clip the pronunciation of the word hops seems like a clear illustration.

Lucid, original and thought-provoking as always.

I suspect the “same height, same backness, different lip position” concept will have to be retained in teaching phonetics. However, the centralised appearance of unrounded vowels on your chart may not be completely irrelevant to “tongue space” in real life. Languages that contrast [i] and [y], for example, might show that “the highest point of the tongue” is less front for the rounded vowel. I wonder if we can find some MRI shots of the vowels of speakers of, say, French or German or Mandarin. Do you know if anyone’s done that? Perhaps John Coleman?

Your post made me wonder if there are any languages that contrast /ɨ/ and /y/. I bet the answer is no. I also wonder if in at least some languages that contrast /i(ː)/ and /y(ː)/ there is free variation between [ɨ(ː)] and [y(ː)] for /y(ː)/. [ɨ] and [y] sound pretty similar to me.

That was a little off-topic. Sorry. I tend to do that 🙂

Very much on-topic, Phil. Turkish has y and an unrounded close non-front vowel, transcribed either as ɨ or ɯ. I guess what constitutes ‘contrast’ depends on your phonological analysis.

I wish someone would measure both the ɯ and a of Turkish in the speech of someone with a fairly conservative pronunciation, perhaps Zeki Müren or someone like that. I would like to know how back those phones are.

Lucid, original and thought-provoking as always.

Indeed. Isn’t this one of the most majestic blogs out there? Phenomenal.

I am a native Turkish speaker, and I think the Turkish “a” sound is identical to the English STRUT vowel. However, there is a fronted and lowered version of it, which is quite similar to the Spanish “a” sound and is used in Turkish only in loanwords. The difference between those “a” sounds can be clearly observed in the Turkish pronunciation of the word “Lavabo” /lavʌbɔ/.

Thanks for the kind words, Mitko.

It seems fair to say that the main articulatory difference between i and y is generally in the lips. But defining vowels in terms of old articulatory notions isn’t my idea of “teaching phonetics”!

I don’t know about MRI shots; real research on articulation is hard to do and relatively scarce, while acoustic research is relatively abundant and/or easy to do.

Another post that has sent a lot of what I thought I knew down the drain… Well, erm… thanks, Geoff? 😉

Seriously though, thanks for opening my eyes again… 🙂 Keep posting!

You may also be interested in using VocalTractLab for higher sound quality and better articulation control than Praat. Version 2.1 is presently out.

Your article leaves a false impression that the secondary cardinals came long after Bell’s time. They are very much part of Bell’s original 36 vowels: 18 rounded, 18 unrounded. Bell also has a more meaningful distinction for “centralized” vowels. Rather than being just vowels that appear in the center of the vowel space, Bell’s mixed vowels are distinct because the tip of the tongue is raised, creating a second air restriction in addition to the one towards the velum.

What Bell lacks, and what MRIs, x-rays and articulatory synthesizers provide, is the idea that low F1s come from constriction in the back of the throat, and high F1s from wide openings in the back of the throat; it’s not really a jaw issue, as Jones implied it was. Other than this pharyngeal-chamber oversight or omission, I find Bell’s articulations and drawings just as meaningful today, and more instructive than Jones’s four x-rays. The high front [i] is more forward than the backless Bell drawing suggests, though.

I’m new to phonetics, but I found this fascinating.

I disagree with the way /a/ is treated here. Why is it treated as a low-central vowel, and, more importantly, why are the low-front vowel [a] and low-central vowel [ä] merged as though they were the same vowel sound? The low-front vowel is the vowel used in ‘trap’ in SSBE. The low-central vowel, however, is the sound found in Australian English ‘palm’ (and in a vast number of other languages). The two are similar but not identical. And I would not use ‘ɐ’ to describe the low-central vowel, because ɐ is a little higher; it describes the vowel of ‘strut’ in certain kinds of southern English.

I do not think that the IPA vowel chart is crowded… On the contrary, I think that 42 vowel symbols are necessary to capture all the fine differences in pronunciation across languages and accents. In fact, the problem with IPA is that it doesn’t have a dedicated symbol for each ‘slot’, but uses diacritics or lowering symbols instead. Phoneticians usually avoid these marks, instead choosing their symbols not for phonetic accuracy, but for orthographic convenience, and this distorts the phonetic accuracy of their transcriptions. For those of us who are interested in phonetics (as opposed to phonemic analysis) and actually learning accents (instead of describing them on paper), the practice of using very broad transcriptions is very frustrating indeed, especially when it’s done for no other reason than avoiding diacritics.

Thank you for the article. This was very interesting!

This and your video are both great. However, do you think you could make the notational variants clickable? Maybe not for [ə ~ ɜ] or [ɵ ~ ʉ], but having sounds for the others would be useful.

It would be cool to have a version of this chart where you could click and play the sound of any vowel on the continuum, not just those points assigned a symbol, so that you could try to pinpoint languages and dialects.

At any one place of articulation there is 1 plosive, 1 fricative, 1 approximant, 1 tense vowel, & 1 lax vowel.

And 1 nasal and 1 affricate

Would you be able to share the specific data you based your vowel chart graphs on? While the use of the vowel symbols directly on the chart is good for illustrative purposes, the size of the symbols makes it something of a challenge to pinpoint the frequencies. Thanks in advance, and a very interesting post =)